Introduction

“Hacks, Fakes, Bans, and GANs” were just four of the many topics I could have chosen for the title, but together these particular ones have a nice poetic quality. The word cloud at the top shows a more complete set, reflecting the full diversity of concerns that intersect contemporary biometrics: topics ranging from algorithms to cybersecurity, policy, and artificial intelligence.

The technology behind biometrics is improving rapidly. The business of biometrics is booming. But it’s the social impact of biometric identification, and of its use in surveillance, that has captured many of the headlines lately. Trust, fairness, privacy, transparency, ethics: biometrics underlies many hot-button social issues, and this conversation is happening on a global scale.

How Did We Get Here?

It’s likely we wouldn’t be talking about these social issues if the tech behind biometrics hadn’t already crossed a threshold of adoption and performance.

Mass adoption of biometric tech is already here, and growing rapidly:

♦ Many mobile device and laptop users already authenticate and unlock using some form of biometric such as fingerprint or face recognition.

♦ Airports were early adopters, and it’s now routine to encounter biometrics at customs. The biometrics company CLEAR employs biometrics in its kiosks at many airports. Subscribers to the service bypass the long lines at x-ray security once they are authenticated.

♦ China alone will deploy over 600 million surveillance cameras with face recognition by 2020, compared to 160 million in 2016 [28].

♦ Due to economies of scale, inexpensive fingerprint sensors will start to appear on credit cards (called biometric or fingerprint cards).

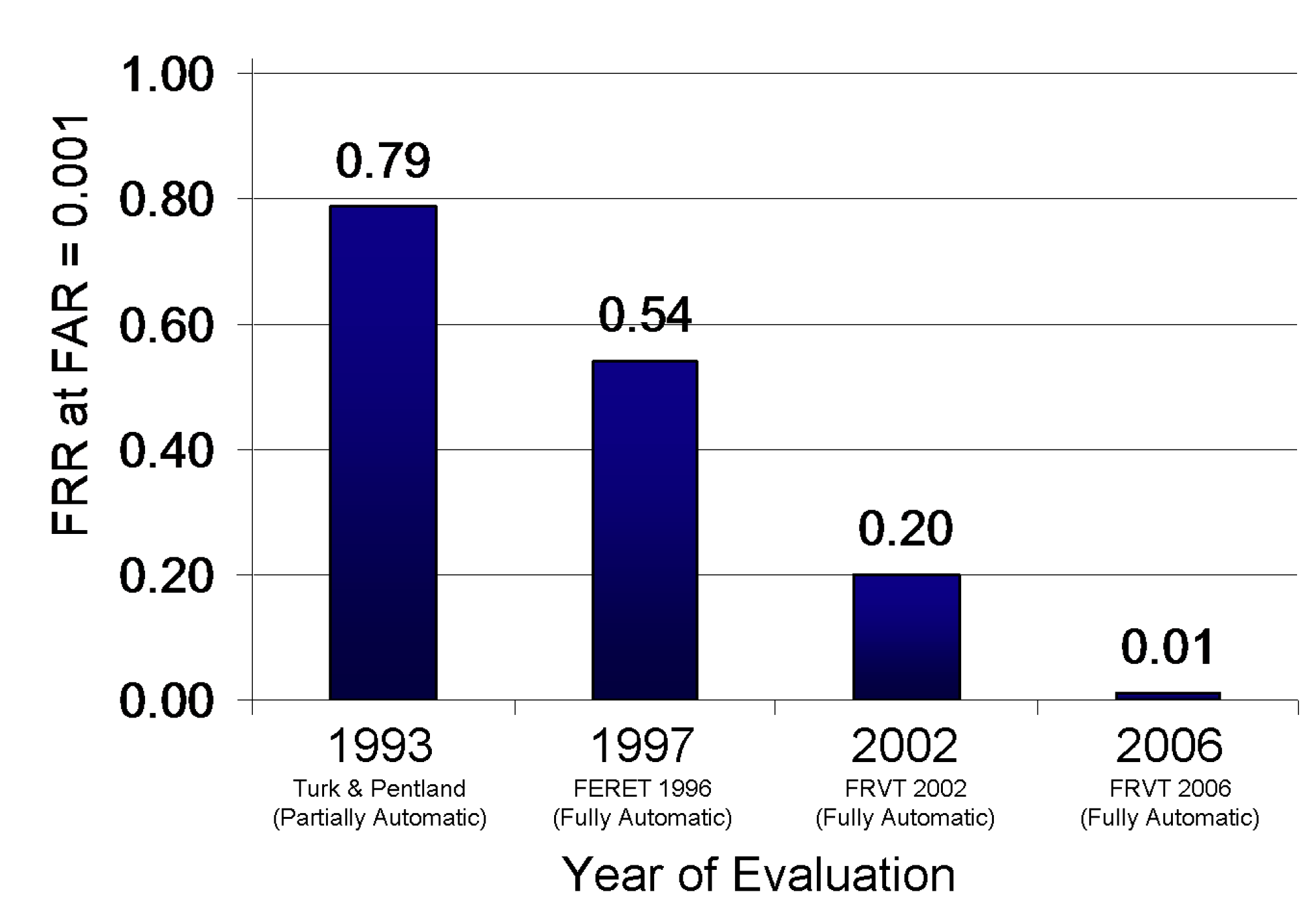

Biometric accuracy and performance have improved dramatically. Face recognition is a great example. The graphic below shows the results of various US Government-sponsored efforts to quantify the error of face recognition systems over the last few decades. Between 1993 and 2006, the error rate decreased significantly. Early systems performed poorly, but by the mid-2000s they had reached a decent level of accuracy [1].

The error rate of face recognition systems decreased dramatically over the last two decades [1].
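Error rates like these are typically summarized as a false reject rate (FRR, genuine users wrongly rejected) measured at a fixed false accept rate (FAR, impostors wrongly accepted), such as 0.001. Below is a minimal sketch of computing FRR at a target FAR from similarity scores, assuming a matcher where a higher score means "more similar"; the synthetic scores in the usage lines are illustrative only, not data from [1].

```python
import numpy as np

def frr_at_far(genuine, impostor, target_far=0.001):
    """Return (threshold, far, frr) for the lowest threshold whose FAR <= target_far.

    A comparison is accepted when its similarity score is >= threshold.
    FAR: fraction of impostor comparisons accepted (false matches).
    FRR: fraction of genuine comparisons rejected (false non-matches).
    """
    gen = np.sort(np.asarray(genuine, dtype=float))
    imp = np.sort(np.asarray(impostor, dtype=float))
    # Candidate thresholds: every observed score, plus +inf ("reject everything").
    cands = np.unique(np.concatenate([gen, imp, [np.inf]]))
    far = 1.0 - np.searchsorted(imp, cands, side="left") / len(imp)  # share of impostors >= t
    frr = np.searchsorted(gen, cands, side="left") / len(gen)        # share of genuine < t
    # FAR only falls as the threshold rises, so the first candidate that meets
    # the target is also the one with the lowest FRR.
    i = int(np.argmax(far <= target_far))
    return float(cands[i]), float(far[i]), float(frr[i])

# Usage with synthetic, normally distributed scores (illustrative only).
rng = np.random.default_rng(0)
genuine_scores = rng.normal(0.8, 0.1, 2_000)
impostor_scores = rng.normal(0.3, 0.1, 20_000)
print(frr_at_far(genuine_scores, impostor_scores, target_far=0.001))
```

Lowering the allowed FAR pushes the threshold up and the FRR with it, which is why evaluations fix one rate before comparing systems on the other.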

The best algorithms during this period were not based on deep learning, because neural networks weren’t yet powerful enough to beat other algorithms such as PCA and support vector machines. Since then, the deep learning revolution has occurred, and algorithms such as convolutional neural networks (CNNs) are now the state of the art in face recognition.
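For readers unfamiliar with the pre-deep-learning approach, here is a minimal sketch of PCA-based face identification (often called "eigenfaces"): flatten aligned face images, find the principal components of the gallery, and match a probe to its nearest neighbor in that low-dimensional space. The random 64-pixel "faces", the names, and k=4 are illustrative assumptions; practical systems used aligned photos, several images per person, and a tuned number of components.

```python
import numpy as np

def fit_eigenfaces(gallery, k):
    """gallery: (n_images, n_pixels) array of flattened, aligned face images."""
    mean_face = gallery.mean(axis=0)
    # Rows of vt are the principal directions ("eigenfaces") of the centered images.
    _, _, vt = np.linalg.svd(gallery - mean_face, full_matrices=False)
    return mean_face, vt[:k]

def project(images, mean_face, eigenfaces):
    """Represent each image by its k PCA coefficients."""
    return (images - mean_face) @ eigenfaces.T

def identify(probe, gallery_codes, labels, mean_face, eigenfaces):
    """Nearest neighbor in eigenface space."""
    code = project(probe[np.newaxis, :], mean_face, eigenfaces)
    distances = np.linalg.norm(gallery_codes - code, axis=1)
    return labels[int(np.argmin(distances))]

# Toy data: random 8x8 "images" stand in for real faces (an assumption for illustration).
rng = np.random.default_rng(0)
gallery = rng.normal(size=(5, 64))
labels = np.array(["ann", "ben", "cai", "dev", "eve"])
mean_face, eigenfaces = fit_eigenfaces(gallery, k=4)
gallery_codes = project(gallery, mean_face, eigenfaces)
probe = gallery[2] + 0.05 * rng.normal(size=64)  # a noisy new capture of "cai"
print(identify(probe, gallery_codes, labels, mean_face, eigenfaces))  # cai
```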

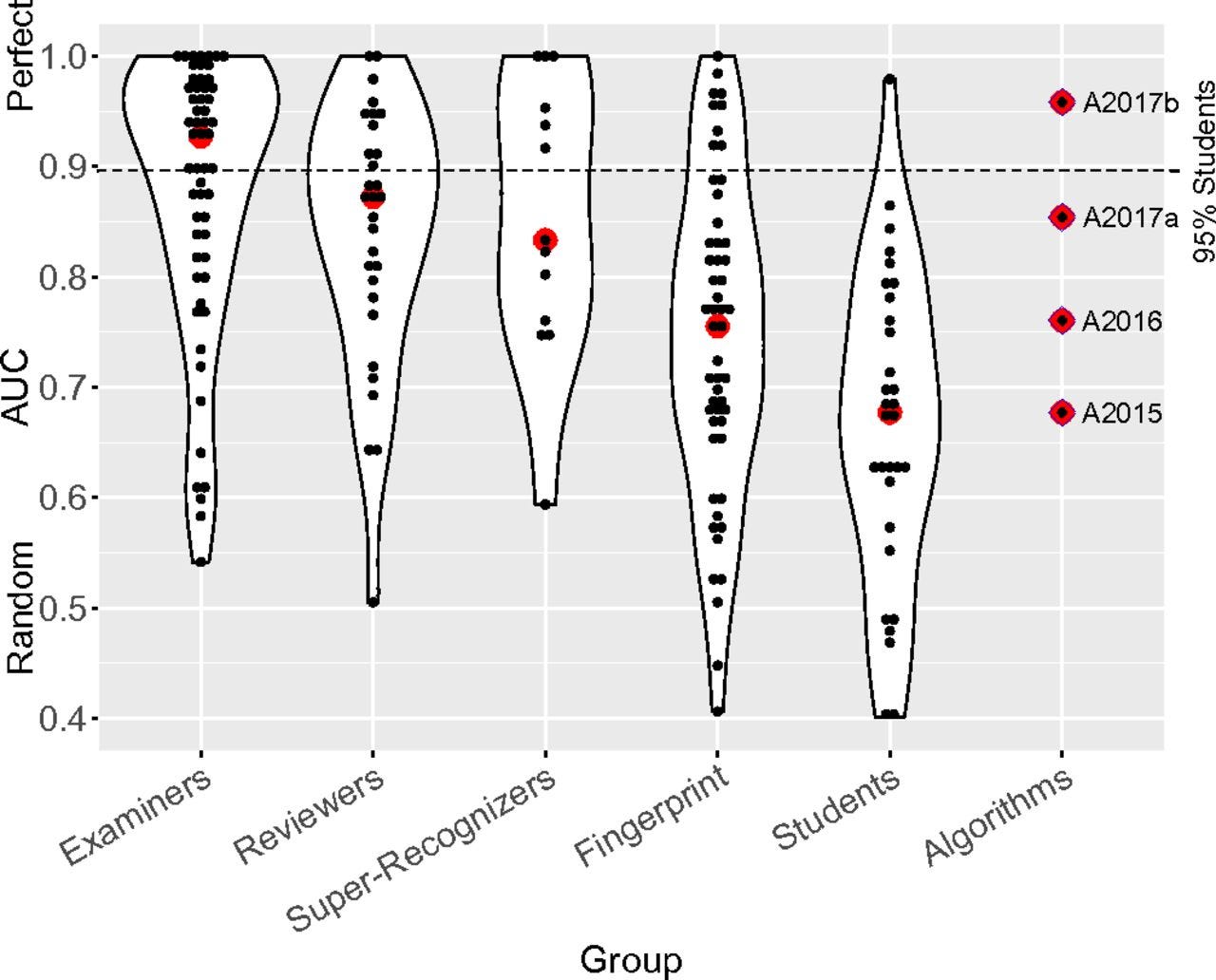

The chart below shows the evolution of CNNs for each year between 2015 and 2017 (last column in the violin plot) [2]. Not only did performance jump significantly year by year, but the CNNs also surpassed the human participants measured on the same face recognition task. “A2017b” is a ResNet-based CNN with a specialized loss function [20].

Comparison of CNN face recognition algorithms with various groups of human participants on a face recognition task. “Examiners” and “Reviewers” are trained specialists in face forensics, often employed by law enforcement. Super-recognizers are untrained but exhibit a high degree of natural ability for the task. Students were randomly selected [2].
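Reference [20] describes the L2-constrained softmax loss: before the final classification layer, each face feature vector is rescaled to a fixed L2 norm alpha, so every face lands on a hypersphere of the same radius and identities must be separated by the direction of their features rather than by feature magnitude. Below is a minimal NumPy sketch of the forward loss computation only; the shapes, alpha = 16, and the absence of a training loop are simplifying assumptions, not the A2017b configuration.

```python
import numpy as np

def l2_constrained_softmax_loss(features, weights, bias, labels, alpha=16.0):
    """Cross-entropy loss on features rescaled to a fixed L2 norm alpha.

    features: (batch, d) embeddings from the CNN's last feature layer
    weights:  (d, n_classes); bias: (n_classes,); labels: (batch,) integer class ids
    alpha=16.0 is an illustrative value, not necessarily the setting used in [20].
    """
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    scaled = alpha * features / np.maximum(norms, 1e-12)  # every row now has norm alpha
    logits = scaled @ weights + bias
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    loss = -log_probs[np.arange(len(labels)), labels].mean()
    return float(loss), scaled

# Hypothetical embeddings for 4 faces and a classifier over 3 training identities.
rng = np.random.default_rng(1)
features = rng.normal(size=(4, 8))
weights = rng.normal(size=(8, 3))
bias = np.zeros(3)
labels = np.array([0, 1, 2, 1])
loss, scaled = l2_constrained_softmax_loss(features, weights, bias, labels)
print(loss, np.linalg.norm(scaled, axis=1))  # every norm equals alpha
```

Because of the rescaling, multiplying a feature vector by any positive constant leaves the loss unchanged, which is exactly the property the constraint is meant to enforce.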

Humans still often perform better at face recognition under conditions that challenge even the best algorithms, including extreme illumination and non-frontal poses. Also, state-of-the-art (SOTA) algorithms continue to have higher error rates for darker-skinned and female subjects. Nevertheless, the recent progress driven by modern neural networks, hype or otherwise, is a stunning achievement.

The Biometrics Market

Face recognition is just one example of a biometric technique that has undergone continuous technological advancement and rapid adoption. The biometrics market as a whole encompasses the other usual suspects, the so-called “hard biometric” techniques, including eye/iris recognition, voice/speaker recognition, fingerprint recognition, and signature recognition.

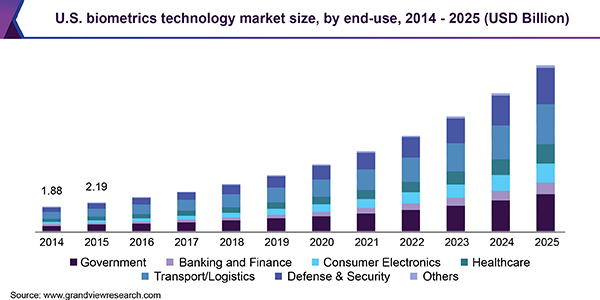

The biometrics market segments include government, defense, law enforcement, banking and finance, consumer electronics, healthcare, and transportation. The graphic below shows the past and projected market size in the US for all of these segments. If these projections are correct, the total biometrics market in the US alone will grow six-fold between 2014 and 2025.

Source: GrandView Research, Biometrics Market Report, 2019.
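For a sense of the pace this implies: a six-fold increase over the 11 years from 2014 to 2025 corresponds to a compound annual growth rate of roughly 18%, assuming smooth year-over-year compounding (V denotes US market size in the given year; the report’s own year-by-year figures may differ):

```latex
\text{CAGR} = \left(\frac{V_{2025}}{V_{2014}}\right)^{1/(2025-2014)} - 1 = 6^{1/11} - 1 \approx 0.177
```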

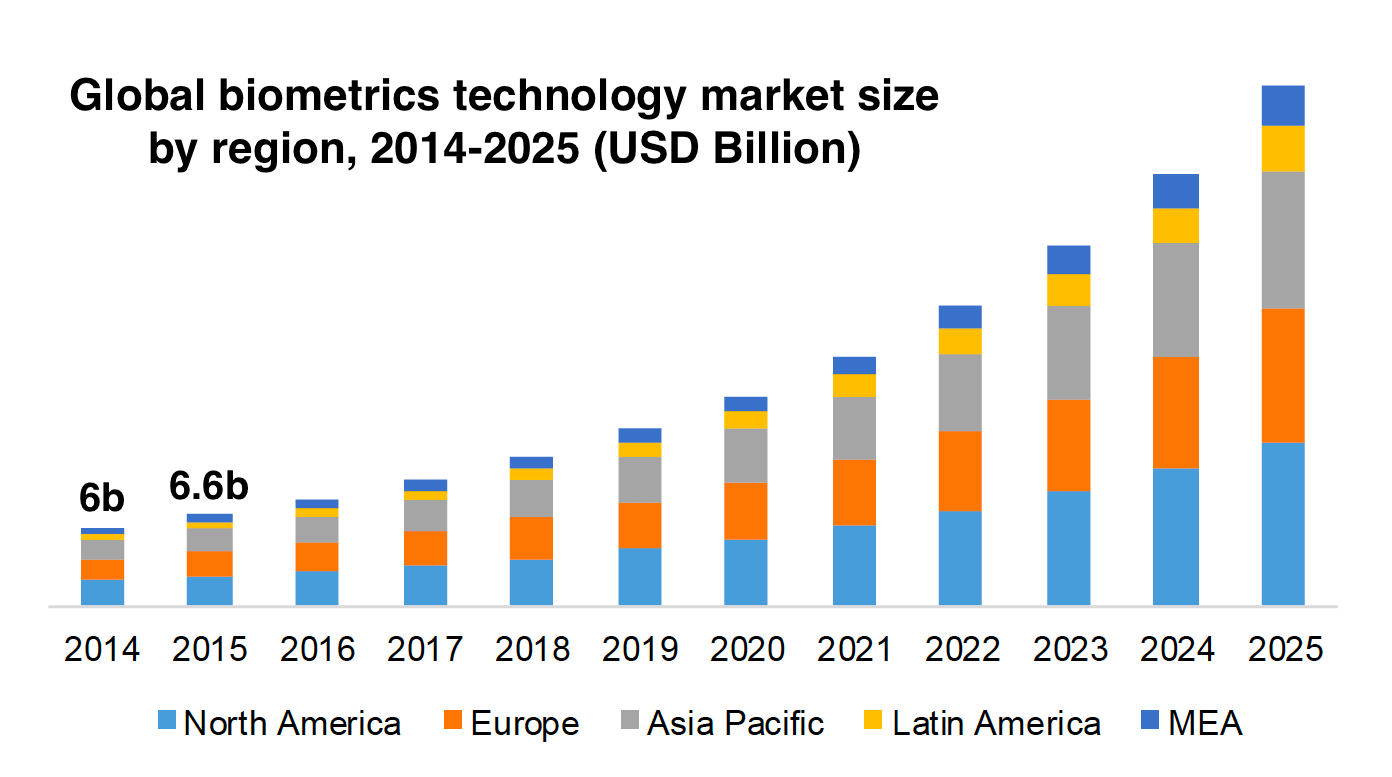

Globally, the trend is also striking. As the next graphic shows, the major international markets are North America, China, and the UK. This is not too surprising, since the US, China, and the UK lead the world in biometric deployments. What might be surprising is the size and growth in developing regions such as Africa and the Middle East (both captured by “MEA”).

Source: GrandView Research, Biometrics Market Report, 2019.

It’s worth pausing a moment to consider the level of broad adoption and commoditization around biometrics that we are seeing today. A decade ago, few would have predicted the scale, the global impact, and the ubiquity of biometrics. Many of us now routinely authenticate to our devices using our fingerprint or face and don’t think twice about it. Biometrics have blended into our lives, so it’s easy to underestimate the tech’s pervasiveness.

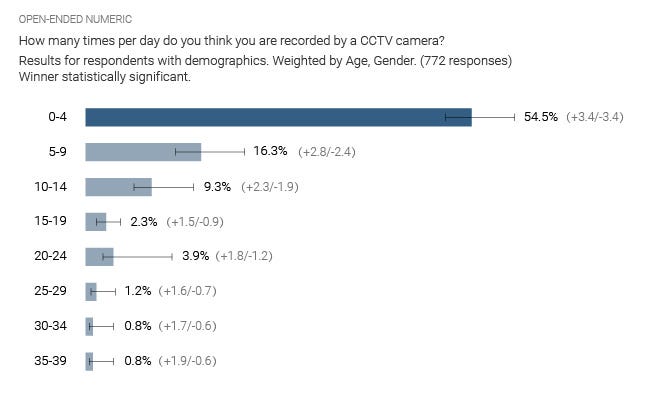

In a related study, people were asked to estimate how often per day they are recorded by surveillance cameras. The results of that study are shown below. Most people thought it was 10 times or less.

Source: Reolink.com [19]

It’s actually a lot more, especially if you live in a dense urban area. For example, a Londoner will likely appear on 300 cameras per day, and in major US cities, it’s around 75 times a day [19].

Of course, not all of the cameras pointed at you are going to recognize you, but in many parts of the world, identifying and tracking people is already deeply intertwined with surveillance [28]. Countries like China and India are deploying mass surveillance and collecting the biometric data of all their inhabitants.

Brave New World: Science Fiction No Longer

Source: “Minority Report,” Dir. Steven Spielberg, 20th Century Fox, 2002.

Science fiction has been warning us for decades. Consider the popular movie “Minority Report” (2002). It depicted a future where, for example, as soon as you walk into the mall, you are identified and instantly bombarded with personalized advertisements.

Source: “Demolition Man,” Dir. Marco Brambilla, Warner Bros, 1993.

In “Demolition Man” (1993) we saw a precursor to China’s social credit system. In that dystopian view of the future, the State continually listens to what you say. As soon as you utter any profanity, a nearby kiosk instantly spits out a ticket with a heavy fine.

These movies were inspired by science fiction stories that go all the way back to the 1930s. At the time of the movies’ release, actual biometric technology wasn’t even close to the fictional version, so the depictions seemed farcical, even comical. Now, the ideas don’t seem so far-fetched. This is the brave new world we live in.

It is said that technology is amoral, in the sense that it is not inherently good or bad. It is up to its creators to apply the tech for good purposes or abuse it for malicious ones. We are seeing this play out on a grand scale with biometrics.

I will now highlight a few things I see across that spectrum, starting with the good, then the bad, then the ugly.

Biometrics: The Good

Password Replacement at the Edge: Biometrics is now commonly offered as a replacement for password-based authentication on most mobile devices and laptops, and for many apps such as banking. Passwords have well-known problems: users choose easy-to-guess passwords, or, conversely, systems require complex passwords that are hard to remember. The FBI has recommended incorporating biometrics into authentication systems, because password-based and even multi-factor systems are vulnerable to attack [29].

Democratization of Algorithms: The deep learning revolution was started not at a company but in a few research labs. This means that many algorithms are publicly documented, in papers that anyone can read and implement. This open endeavor continues to this day and has only grown: many research universities and industry labs now contribute to the state of the art. The best face recognition algorithms, for example, were published and presented at conferences within the last few years. I believe that such rapid progress could never have occurred in a purely commercial venture. It’s not clear how long this openness will last; even Google has filed patents on fundamental techniques in machine learning [3].

Democratization of Software: All of this open research is matched by a lot of open-source software activity. Many deep learning research papers now include an open-source TensorFlow or PyTorch implementation that anyone can download and use for free [4,5]. Combined with now-commonplace public cloud infrastructure such as Azure, Google Cloud, and Amazon AWS, this has created a burgeoning ecosystem of turnkey services (AI-as-a-Service, Machine-Learning-as-a-Service, Biometrics-as-a-Service, etc.). You do not need to hire a team of researchers or own any computing equipment to deploy state-of-the-art biometric solutions.

Democracy Enabler: Besides facilitating e-commerce transactions (Face ID, Voice Pay, etc.), biometrics is being considered by several governments around the world as a way to let citizens vote. In many parts of the developing world, getting to a location to register or to vote can be extremely difficult. If done right, this could be a democracy game changer in many countries [7].

Biometrics: The Bad

Questionable Application of Biometrics by Law Enforcement: Many users of biometric tech don’t understand its limitations. This can create a dangerous situation, especially for a law enforcement entity with the power to arrest and detain people based solely on suspicion or light evidence. It was reported that a major US police department had been searching a mugshot database to find a crime suspect. But the search was not based on surveillance footage of the suspect caught in the act; rather, an eyewitness claimed the perpetrator looked like a celebrity, and a picture of that celebrity was used as the query in the mugshot face search [8].

In related news, Amazon, which sells its face-recognition-as-a-service (Rekognition) to law enforcement, came under heavy scrutiny and criticism. In a study, the ACLU used Amazon’s tech to search a public database of 25,000 mugshots, using the faces of all 535 members of Congress as queries. The search turned up 28 false matches [23]! What’s worse, nearly 40% of the false matches were people of color. This is evidence of racial bias even in state-of-the-art face recognition algorithms, and in the datasets used to train them. Google has suspended the availability of its face recognition service for these reasons [26], but it recently released a new version of its personal home assistant that includes both face and voice recognition features.
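A quick back-of-the-envelope calculation shows why searching a large database magnifies small error rates. It treats each of the 28 false matches as a single false-matching comparison; that is an assumption, and if any member matched several photos, the per-comparison rate is higher:

```latex
\frac{28}{535} \approx 5.2\% \text{ of queries}
\qquad
\frac{28}{535 \times 25{,}000} = \frac{28}{13{,}375{,}000} \approx 2.1 \times 10^{-6} \text{ per comparison}
```

In other words, an error of roughly two in a million per comparison still produced a false match for about one query in every twenty.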

Biometric Data Security: The so-called “hard biometrics” like your fingerprints, your voice, and your face are “intrinsic.” They don’t change much even as you age, wear makeup, change hairstyles, or change outfits. This is why hard biometrics are such an effective way to identify an individual. But what if the data is stolen from your mobile device or from a server in the cloud? It could then be used to authenticate to services without your approval, and you can’t change your biometrics the way you can change a password. Given the sheer number of high-profile data breaches we’ve seen recently, we should all be very concerned about how institutions protect our biometric data, if they have it.

Biometric Data Sharing: Facebook’s Cambridge Analytica fiasco revealed to everyone the sophistication and scale of a data-sharing economy that has been operating for many years, invisible to online users. Algorithms are currently used to recommend products and services to you based on your previous purchases, views, and clicks, and this data is bought and sold in a lucrative data market. But what about your biometric data? Has it also been sold to third parties without your consent? If a company believes that your biometric data can predict your purchase preferences, that data becomes as “valuable as oil.” Conversely, your biometric data could also be used to restrict your opportunities because you don’t fit a predictive model.

Biometrics: The Ugly

Mass Surveillance and Tracking: I think the issues around biometric data hacking and surreptitious data sharing stem from a basic fact: biometric data is very personal information, and users are now starting to feel violated when that data is stolen or used without their consent. Mass surveillance and tracking take this to a whole new level, because the privacy violation is now perpetrated by a government.

Many believe that mass surveillance, if used judiciously, is just another tool to protect citizens. Consider the case of the Boston Marathon bombers, who were caught because police were able to stitch together a timeline of events from surveillance footage. I have no doubt it can be an effective tool for spotting terrorists, intruders, burglars, thieves, arsonists, and other criminals.

On the other hand, mass surveillance could be used to enforce behaviors that the state deems proper or improper, beyond just criminal activity. Consider, for example, the use of surveillance in China’s “social credit system.” There have already been reports that mass surveillance has been used as a tool of suppression against a Muslim minority in China [6]. Fortunately, privacy advocates in China are pushing to curtail the government’s use of mass surveillance tech [27].

Until we have the right regulation and consent framework in place, I believe that the adoption of mass surveillance is too much of a slippery slope for democratic countries like the US, countries that guarantee civil liberties and certain privacy rights.

This may actually be an opportunity for tech companies to become the ethics leaders in this space. One biometrics and surveillance company, Blink Identity, is particularly notable in this regard. As its CEO stated recently, “We believe the key is that [identity technology] solutions must be transparent, the vendor must be accountable, and participation must be voluntary.” [22]

AI Synthesized Media: By now, we’ve all seen humorous video clips of face swapping, or augmented reality apps that place hats or masks on video participants in real time. New deep learning-based techniques, such as generative adversarial networks (GANs), are taking this kind of video manipulation to the next level. Anyone can now craft artificial videos showing, for example, a politician saying something they never said, or a CEO doing something they never did.

These are deepfakes. They are a problem now: they are easy to generate with open-source tools, and they become more realistic and convincing every day. Fortunately, no deepfake has yet been directly responsible for a missile launch or a stock market crash. But, perhaps just as troubling as a possible global catastrophe, deepfakes have already been used as an instrument of slander and misogyny. Deeptrace has been tracking the spread of deepfakes on the internet, and the problem is growing at an alarming rate: there are twice as many deepfakes now as there were just a year ago [21].

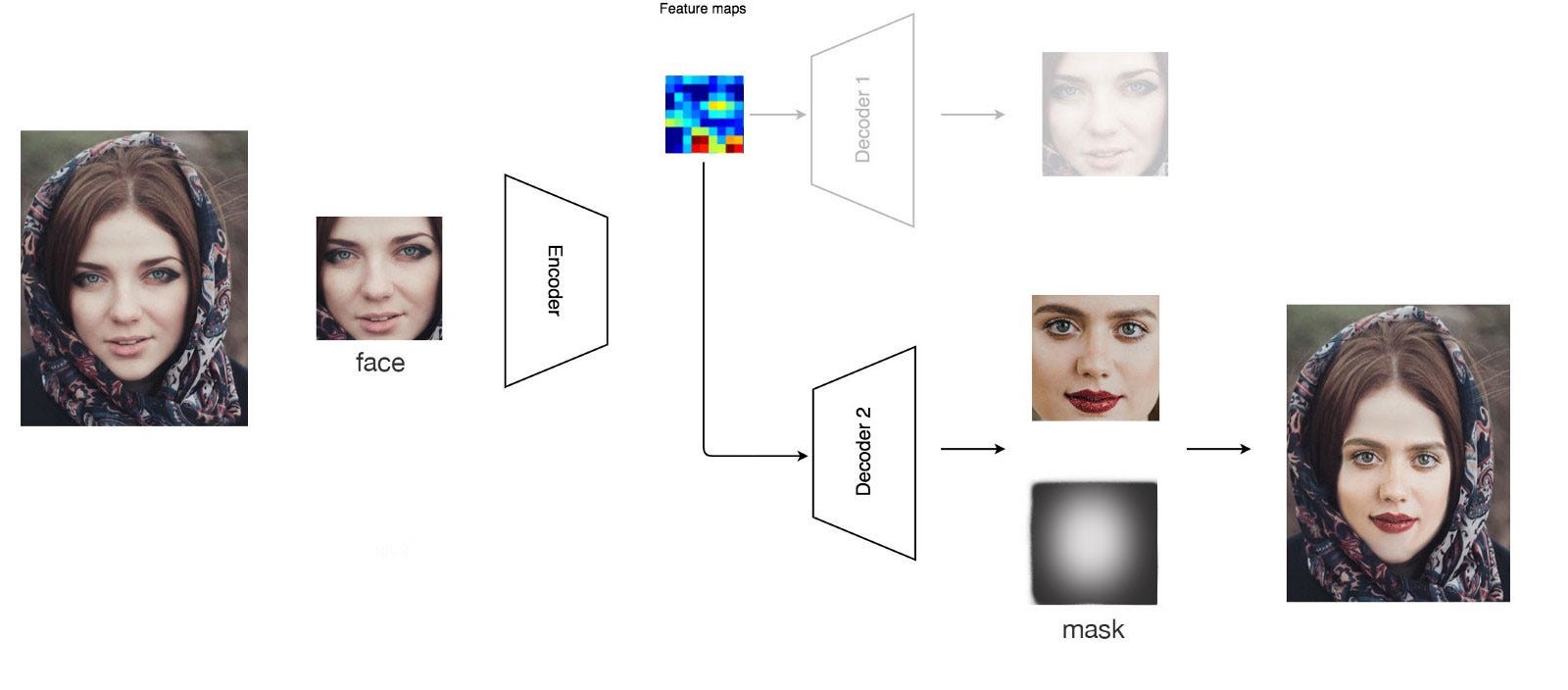

Recent research demonstrated how GAN-based face swapping can be used to spoof face recognition systems. The researchers swapped one user’s face onto another user in a video, then presented the resulting video to face recognition systems based on two different convolutional networks (VGG and FaceNet). The systems did not detect the fake and instead accepted the video as the user whose face had been swapped in [24].
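Embedding-based verification, as in FaceNet-style systems, helps explain why this attack works. The sketch below uses hypothetical 3-D embeddings and a made-up threshold; it shows that the matcher only asks whether the face in a frame is close to the enrolled template, not whether the video itself is genuine.

```python
import numpy as np

def cosine_similarity(a, b):
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def verify(probe_embedding, enrolled_embedding, threshold=0.8):
    """Accept the claimed identity if the embeddings are similar enough.

    threshold=0.8 is a hypothetical value; real systems tune it to a target FAR.
    """
    return cosine_similarity(probe_embedding, enrolled_embedding) >= threshold

# Hypothetical 3-D embeddings (real ones have hundreds of dimensions).
alice_enrolled = [0.9, 0.1, 0.4]
face_swapped_frame = [0.85, 0.15, 0.45]  # Bob's video with Alice's face swapped in
bob_frame = [0.1, 0.9, -0.3]             # Bob's own face

print(verify(face_swapped_frame, alice_enrolled))  # True: the matcher sees Alice's face
print(verify(bob_frame, alice_enrolled))           # False
```

Detecting the swap therefore has to come from somewhere else, such as liveness or deepfake detection.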

Where do we go from here?

Fortunately, there is a lot of work already underway to deal with many of these challenges and issues. Some of them are technical, and some are related to policy and regulation. Here are just some examples of ongoing efforts:

Liveness Detection: This is an anti-spoofing technique that ensures a live human is presenting themselves to a biometric system, rather than, for example, an image of a fingerprint or face. Techniques vary in sophistication. For example, the authentication system could guide the user to move their head in a particular direction. More complex systems look for artifacts that reveal spoofing, such as differences between the texture of real skin and that of the paper a spoof photo is printed on [17]. Both the iPhone and Google’s Pixel now use depth sensing to prevent image-based spoofing.
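The head-movement challenge described above can be sketched as a simple challenge-response check. This is a minimal sketch assuming a hypothetical head-pose estimator that reports one (yaw, pitch) reading in degrees per challenge step; the 15-degree threshold and the sign conventions are assumptions. A random sequence makes a pre-recorded video harder to replay, and a static photo fails because its pose never changes.

```python
import secrets

DIRECTIONS = ("left", "right", "up", "down")

def make_challenge(length=3):
    """Random sequence of head movements; unpredictability defeats simple replays."""
    return [secrets.choice(DIRECTIONS) for _ in range(length)]

def classify_pose(yaw_deg, pitch_deg, min_angle=15.0):
    """Map a head pose in degrees to a direction.

    Assumed convention: negative yaw = left, positive pitch = up.
    """
    if abs(yaw_deg) >= abs(pitch_deg):
        if yaw_deg <= -min_angle:
            return "left"
        if yaw_deg >= min_angle:
            return "right"
    else:
        if pitch_deg >= min_angle:
            return "up"
        if pitch_deg <= -min_angle:
            return "down"
    return "center"

def passes_liveness(challenge, observed_poses):
    """observed_poses: one (yaw, pitch) pair per step from a hypothetical pose estimator."""
    if len(observed_poses) != len(challenge):
        return False
    return all(classify_pose(yaw, pitch) == wanted
               for wanted, (yaw, pitch) in zip(challenge, observed_poses))

challenge = ["left", "up", "right"]
print(passes_liveness(challenge, [(-30, 2), (3, 25), (28, -4)]))  # True: live user follows prompts
print(passes_liveness(challenge, [(0, 0)] * 3))                   # False: printed photo stays still
```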

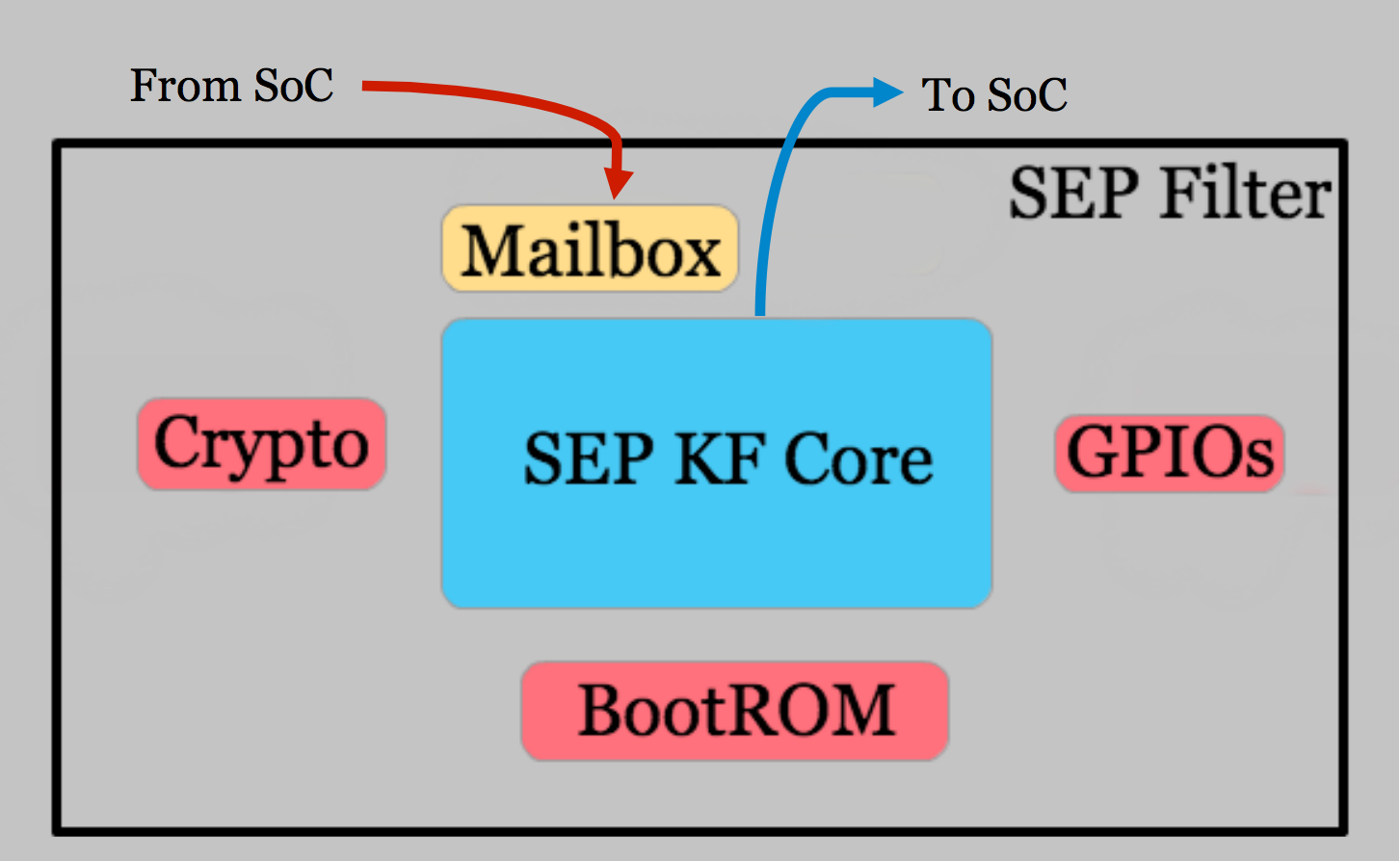

Secure Silicon: Some mobile platform vendors, such as Apple, have decided not to back up biometric data to the cloud. Instead, the sensitive data remains on the device and is processed within a highly secure area of the device’s chipset called the secure enclave, which is designed to be highly tamper-resistant [18]. ARM-based chips have something similar, called TrustZone, and Google Pixel phones have an entirely separate tamper-resistant chip called the Titan M.

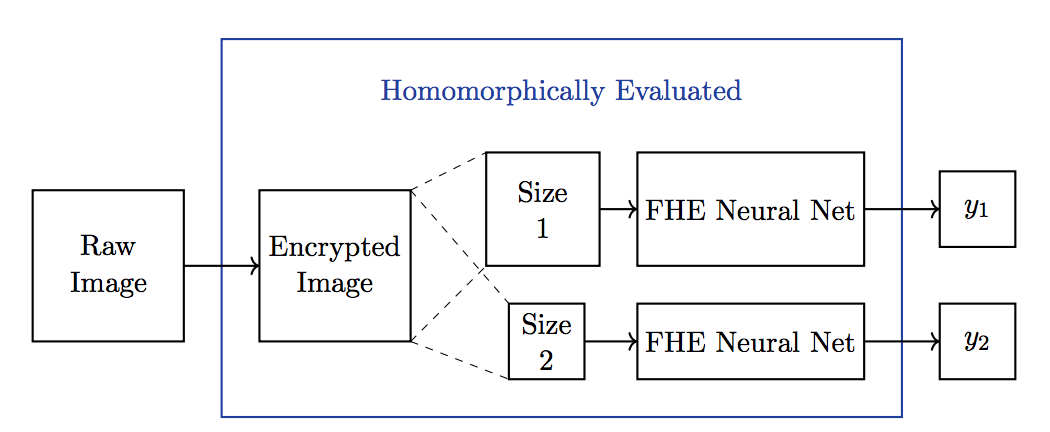

Privacy Preserving Encryption: In cases where biometric data must be sent off the device to the cloud, researchers have devised encryption schemes that can maintain privacy. Sometimes called “private encryption,” the idea is to encrypt the data using a technique called “homomorphic encryption.” The receiver of this biometric “ciphertext” can still perform operations directly on it, without having to decrypt it. The result of the homomorphic operation, such as biometric verification or identification, is returned to the sender. The process never reveals the original biometric data, because it is never decrypted. At least one company has already deployed a product based on this technique for voice/speaker verification [9].
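The text does not say which homomorphic scheme [9] uses. Purely as an illustration, here is a toy sketch using the Paillier cryptosystem, a well-known additively homomorphic scheme, in which the server computes the squared Euclidean distance between an encrypted probe and an enrolled template without ever decrypting the probe. It assumes integer-quantized templates and, for simplicity, keeps the enrolled template in the clear on the server. The tiny fixed primes make it completely insecure.

```python
import math
import random

# Toy Paillier key from two fixed Mersenne primes. NOT secure: real systems use
# large random primes, a vetted library, and a cryptographic RNG (e.g. `secrets`).
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)  # with generator g = N + 1, mu = lam^-1 mod N (Python 3.8+)

def encrypt(m):
    r = random.randrange(1, N)
    while math.gcd(r, N) != 1:
        r = random.randrange(1, N)
    # g^m = (1 + N)^m = 1 + m*N (mod N^2); r^N randomizes the ciphertext.
    return (1 + (m % N) * N) * pow(r, N, N2) % N2

def decrypt(c):
    m = (pow(c, LAM, N2) - 1) // N * MU % N
    return m - N if m > N // 2 else m  # map back to signed integers

# On the device: encrypt the (integer-quantized) probe template.
def client_encrypt(probe):
    return [encrypt(x) for x in probe], encrypt(sum(x * x for x in probe))

# On the server: works only on ciphertexts of the probe.
def server_squared_distance(enc_probe, enc_probe_sq, enrolled):
    # ||x - y||^2 = sum(x^2) - 2*sum(x*y) + sum(y^2).
    # Multiplying ciphertexts adds plaintexts; raising to a power k multiplies by k.
    c = enc_probe_sq * encrypt(sum(y * y for y in enrolled)) % N2
    for cx, y in zip(enc_probe, enrolled):
        c = c * pow(cx, (-2 * y) % N, N2) % N2
    return c

probe = [3, -1, 4, 1, -5]
enrolled = [2, -1, 5, 0, -4]
enc_d = server_squared_distance(*client_encrypt(probe), enrolled)
print(decrypt(enc_d))  # 4, decrypted back on the device
```

The device then compares the decrypted distance against its acceptance threshold; production schemes differ in who holds the keys and who learns the result.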

Federated Learning: Suppose biometric data is needed to train a deep learning model. Instead of pooling all that biometric data in one place and training on it there, it’s possible to distribute the training to the edge devices in a decentralized way. In this case, the “learners” at the edge send their locally trained weights to a central server, which combines them into the shared model. This method of training a model is called federated learning, and it avoids storing all that biometric data in a centralized cloud database, which would be an attractive target for hackers [11].
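The description above matches Federated Averaging (FedAvg), the approach introduced in [11]. Below is a minimal NumPy sketch that assumes a linear model in place of a deep network and three simulated devices: in each round, every device runs a few gradient steps on its own data, and only the resulting weights are sent back and averaged, weighted by each device’s sample count. The data, learning rate, and round counts are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)
TRUE_W = np.array([2.0, -1.0])  # hypothetical "ground truth" the devices jointly learn

def make_device_data(n):
    """Simulated private data held on one device (never sent to the server)."""
    X = rng.normal(size=(n, 2))
    y = X @ TRUE_W + 0.01 * rng.normal(size=n)
    return X, y

def local_update(w, X, y, lr=0.1, epochs=5):
    """A few steps of gradient descent on the device's own data."""
    w = w.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)  # gradient of mean squared error
        w -= lr * grad
    return w

def federated_averaging(devices, rounds=30):
    w = np.zeros(2)  # global model held by the server
    sizes = np.array([len(y) for _, y in devices], dtype=float)
    for _ in range(rounds):
        # Each device trains locally; only its updated weights leave the device.
        local_weights = [local_update(w, X, y) for X, y in devices]
        # Server: average the weights, weighting each device by its sample count.
        w = np.average(local_weights, axis=0, weights=sizes)
    return w

devices = [make_device_data(n) for n in (40, 60, 80)]
w_global = federated_averaging(devices)
print(w_global)  # close to TRUE_W
```

Note that the raw `X` and `y` arrays are only ever read inside `local_update`; the server sees weight vectors alone.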

Ensembles of Soft Biometrics: The so-called hard biometrics have evolved to have very low false positive rates. For example, Apple states that the probability that a random person could unlock an iPhone with Face ID is less than one in a million [16]. But there are times when traditional hard biometrics cannot be used. Some researchers have demonstrated that the right collection of “soft biometrics” could be just as discriminating as one hard biometric. In cases where the hard biometric is unavailable or fails, the right fusion of soft biometrics could pick up the slack [10]. Soft biometrics include eye color, hair color, and body art, as well as “behavioral biometrics” like walking style (gait), gesture style (body language), how one types at a keyboard, and many others.
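To see how a collection of weak traits can add up, consider the chance that a random impostor happens to agree on every trait. The per-trait probabilities below are hypothetical, and the sketch assumes the traits are statistically independent. Real traits (eye and hair color, for instance) often are not, so this is an optimistic bound rather than the fusion method used in [10].

```python
import math

# Hypothetical probabilities that two *different* people agree on each trait.
# Illustrative assumptions only; these are not measured values from [10].
COINCIDENCE = {
    "eye_color": 0.30,
    "hair_color": 0.25,
    "height_band": 0.20,
    "gait": 0.05,
    "typing_rhythm": 0.02,
}

def random_impostor_match_probability(traits):
    """Chance that a random impostor agrees on every listed trait, ASSUMING independence."""
    return math.prod(COINCIDENCE[t] for t in traits)

p = random_impostor_match_probability(COINCIDENCE)
print(f"about 1 in {round(1 / p):,}")  # about 1 in 66,667
```

Even under this generous independence assumption, five fairly weak traits reach only about 1 in 67,000, well short of one in a million, which is why choosing the *right* collection of soft biometrics matters.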

Detecting Deepfakes: Soft biometrics have recently taken on another role: combatting deepfakes. The idea is that it’s extremely difficult to incorporate a person’s many unique traits into a fake video of that person. For example, consider someone who has a nervous tic, or a unique way of gesturing with their hands when they speak. A face swap alone isn’t enough to completely replicate a person digitally [12].
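A minimal sketch of this idea, loosely in the spirit of [12]: summarize a clip by the pairwise correlations between per-frame behavioral measurements (for example head rotation and facial movements), then flag clips whose correlation pattern departs from the person’s reference profile. The features and “mannerisms” below are simulated assumptions, and a plain distance to the mean stands in for the trained classifier a real detector would use.

```python
import numpy as np

def behaviour_signature(clip):
    """clip: (n_frames, n_features) per-frame behavioral measurements.

    Returns the pairwise Pearson correlations between features, which capture
    how a person's movements co-vary over time.
    """
    corr = np.corrcoef(clip, rowvar=False)
    rows, cols = np.triu_indices(corr.shape[0], k=1)
    return corr[rows, cols]

def anomaly_score(clip, reference_signature):
    """Distance from the person's reference profile; larger means more suspicious."""
    return float(np.linalg.norm(behaviour_signature(clip) - reference_signature))

rng = np.random.default_rng(0)

def simulated_clip(genuine, n_frames=300):
    # Hypothetical speaker whose hand gestures (feature 1) track head motion (feature 0)
    # and whose brow (feature 3) moves opposite to the mouth (feature 2).
    x = rng.normal(size=(n_frames, 4))
    if genuine:
        x[:, 1] = 0.8 * x[:, 0] + 0.2 * x[:, 1]
        x[:, 3] = -0.6 * x[:, 2] + 0.4 * x[:, 3]
    return x

# Reference profile built from known-genuine footage of the person.
reference = np.mean([behaviour_signature(simulated_clip(True)) for _ in range(10)], axis=0)
genuine_score = anomaly_score(simulated_clip(True), reference)
fake_score = anomaly_score(simulated_clip(False), reference)  # face swap lacks the mannerisms
print(genuine_score, fake_score)
```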

Google recently released a comprehensive dataset of deepfakes to help researchers develop and test methods to detect them [25]. Other industry groups, including Microsoft, are sponsoring data science challenges around detecting fakes.

Large-Scale Regulation: The GDPR (General Data Protection Regulation) is already in effect in Europe. It holds organizations and companies highly accountable for how consumers’ data is used and protected, and it treats biometric data used to uniquely identify a person as a special category of personal data [13]. California will soon have a similar law in place, the CCPA (California Consumer Privacy Act) [14]. Illinois’s Biometric Information Privacy Act (BIPA) requires companies to get consent before collecting biometric data for commercial purposes. Recently, Facebook was fined millions of dollars for violations of BIPA. The city of San Francisco has already banned the use of face recognition by law enforcement, and other cities are considering the same action. Several proposed bills, both in California and on Capitol Hill, specifically target those who craft deepfakes for malicious purposes [15].

ODSC West 2019: Panelists

We will cover many of these topics and more at the ODSC West 2019 panel of the same name. Come by our session on Nov. 1 at 11 a.m. to hear from the following experts.

Michael Gormish is Head of Research at Clarifai. His team works on bringing image and language understanding to Clarifai’s platform. Recent projects have involved custom face recognition, object detection, tracking, visual search, and image classification. Previously he was responsible for two search engines that connected images from mobile phones to AR experiences. His work at Ricoh included considerations of document and image authenticity, including precursors to blockchain technologies. His research as a computer vision scientist included inventing algorithms used in video games, digital cinema, and satellite and medical image acquisition. He led several aspects of the JPEG 2000 standardization and provided key inventions used in photocopiers, digital cameras, tablets, and imaging services. Michael was named Ricoh Patent Master for being a co-inventor of over 100 US patents. He earned a PhD in Electrical Engineering, dealing with image and data compression, from Stanford University and has served the research community as an Associate Editor of the IEEE Signal Processing Magazine and the Journal of Electronic Imaging. Currently he is interested in changing the world via image understanding.

Mark Straub is the CEO and Co-founder of Smile Identity, a leading provider of digital authentication and KYC services across Africa. Smile’s facial recognition SDKs and ID verification APIs enable banks, telecoms and fintechs to confirm the true identity of any smartphone user with just a Smartselfie™. Previously, Mark led the Khosla Impact Fund, with investments in payments, solar, lending and ecommerce across Africa and India. He began his career in investment banking and venture capital in Silicon Valley at Bank of America and then Draper Fisher Jurvetson. He is passionate about the power of technology and entrepreneurship to transform emerging markets.

Hyrum Anderson is the Chief Scientist at Endgame, where he leads research on detecting adversaries and their tools using machine learning. Prior to joining Endgame he conducted information security and situational awareness research as a researcher at FireEye, Mandiant, Sandia National Laboratories and MIT Lincoln Laboratory. He received his PhD in Electrical Engineering (signal and image processing + machine learning) from the University of Washington and BS/MS degrees from BYU. Research interests include adversarial machine learning, large-scale malware classification, and early time-series classification.

Giorgio Patrini is CEO and Chief Scientist at Deeptrace, an Amsterdam-based cybersecurity startup building deep learning technology for detecting and understanding fake videos. Previously, he was a postdoctoral researcher at the University of Amsterdam, working on deep generative models; and earlier at CSIRO Data61 in Sydney, Australia, building privacy-preserving learning systems with homomorphic encryption. He obtained his PhD in machine learning at the Australian National University. In 2012 he cofounded Waynaut, an Internet mobility startup acquired by lastminute.com in 2017.

References

1. “FRVT 2006 and ICE 2006 Large-Scale Results,” P. Jonathon Phillips et al., NIST, March 2007.

2. “Face Recognition Accuracy of Forensic Examiners, Super-Recognizers, and Face Recognition Algorithms,” P. Jonathon Phillips et al., PNAS, June 12, 2018.

3. “System and Method For Addressing Overfitting in a Neural Network,” Geoffrey Hinton et al., US9406017B2.

4. TensorFlow, http://www.tensorflow.org.

5. PyTorch, http://www.pytorch.org.

6. “China’s Hi-Tech War on its Muslim Minority,” Darren Byler, The Guardian, April 11, 2019.

7. “UN Commission Exec Calls for African Nations to Focus on Civil Registration for Development Gains,” Chris Burt, BiometricUpdate, Oct 21, 2019.

8. “NYPD Used Woody Harrelson Photo to Find Lookalike Beer Thief,” Michael R. Sisak, AP, May 16, 2019.

9. http://privateBiometrics.com

10. “Bags of Soft Biometrics for Person Identification,” Antitza Dantcheva et al., Multimedia Tools and Applications, January 2011.

11. “Federated Learning: Collaborative Machine Learning without Centralized Training Data,” Brendan McMahan, et.al., April 6, 2017.

12. “Protecting World Leaders Against Deep Fakes,” S. Agarwal, H. Farid, Y. Gu, M. He, K. Nagano, and H. Li., Workshop on Media Forensics at CVPR, Long Beach, CA, 2019.

14. https://oag.ca.gov/privacy/ccpa

15. “Malicious Deep Fake Prohibition Act of 2018,” S.3805.

16. “About Face ID Advanced Technology,” https://support.apple.com/en-us/HT208108

17. BioID Liveness Detection, https://www.bioid.com/liveness-detection/

18. “Security enclave processor for a system on a chip,” US8832465B2.

19. https://reolink.com/how-many-times-you-caught-on-camera-per-day/

20. “L2-Constrained Softmax Loss For Discriminative Face Verification,” Rajeev Ranjan et al., arXiv:1703.09507.

21. https://deeptracelabs.com/resources/

22. BiometricUpdate.com interview with Mary Haskett, CEO of BlinkIdentity, https://www.biometricupdate.com/201910/blink-identity-ceo-on-preserving-privacy-in-a-facial-recognition-ticketing-system

24. “Vulnerability of Face Recognition To Deep Morphing,” Pavel Korshunov, ICBB, 2019.

25. https://ai.googleblog.com/2019/09/contributing-data-to-deepfake-detection.html

26. https://www.blog.google/around-the-globe/google-asia/ai-social-good-asia-pacific/

29. https://info.publicintelligence.net/FBI-CircumventingMultiFactorAuthentication.pdf