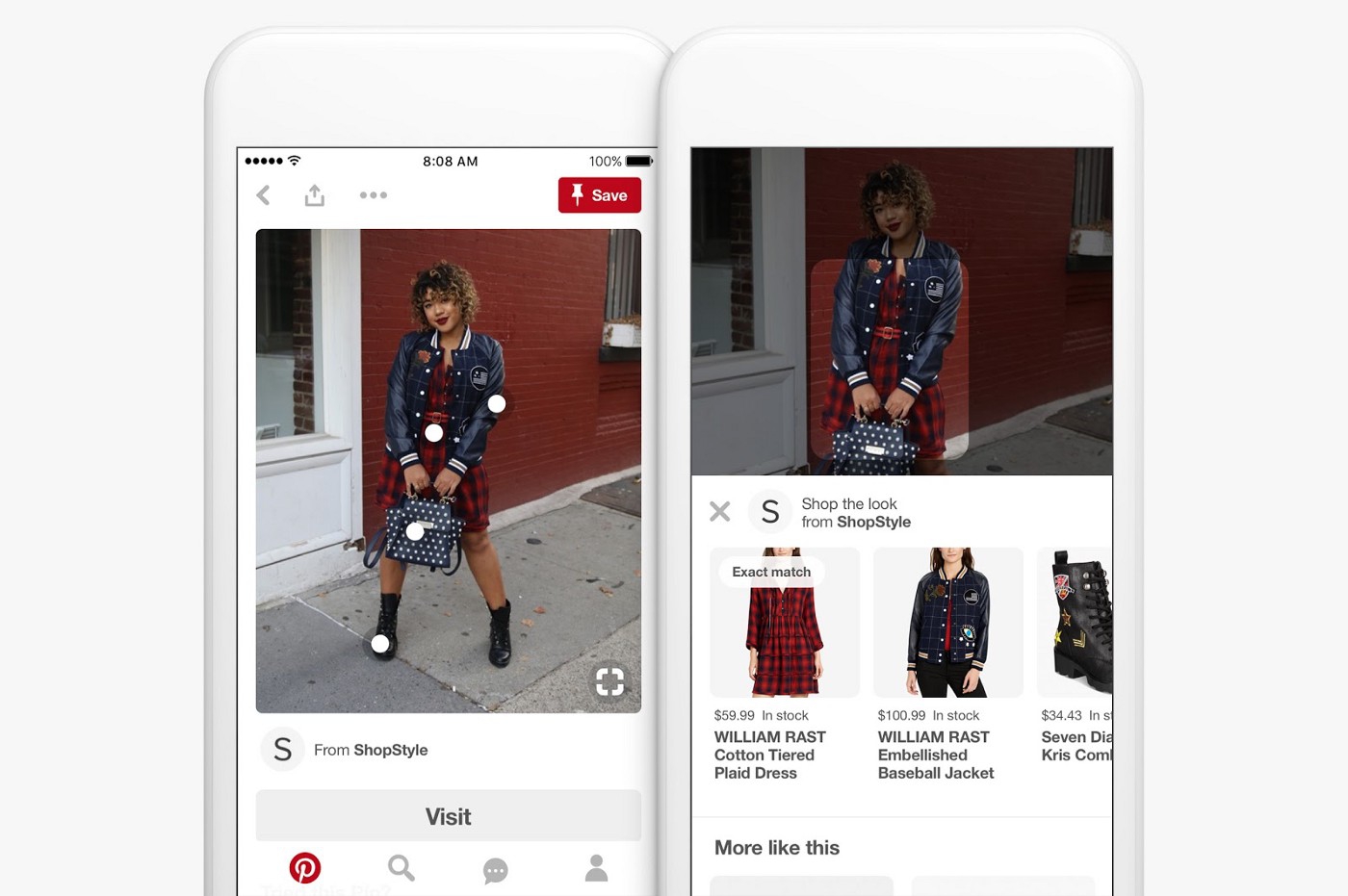

In our previous blog post, we started to look at the many use cases for visual search. For example, you can get instant product recommendations just by snapping a pic of something you like. Several mobile apps have this feature, including ones from Google, Amazon, eBay, Facebook, Pinterest, Wayfair…the list goes on.

Pinterest “Lens Your Look” App

You may have been wondering how these services work. If you guessed that recent advances in machine learning are driving this, you would be right. But that’s just part of the story. These days, an online store the size of Amazon or eBay serves millions of users and offers billions of products. From just a photo, it can search across that enormous inventory and instantly return the top matches. It’s a masterful feat of engineering that lies at the intersection of cutting-edge machine learning, distributed computing, and dedicated hardware.

In this blog series, we’ll dive into the technical challenges of visual similarity search that must operate at web scale. Many of the players in this space have blogs or publish their research, thus offering a glimpse at how they implement visual search on such a massive scale. We’ll showcase some of those examples, and, later on, introduce our own tech that enables billion-scale visual similarity search.

The series will consist of the following posts:

- Machine Learning In Visual Search

- A Picture Is Worth A Thousand…Numbers

- Big Data, Make Room For Big Vectors!

- Embedded Embeddings: New Hardware For Visual Search

In the remainder of this article, we’ll take a sneak peek at those upcoming blog posts.

Machine Learning In Visual Search

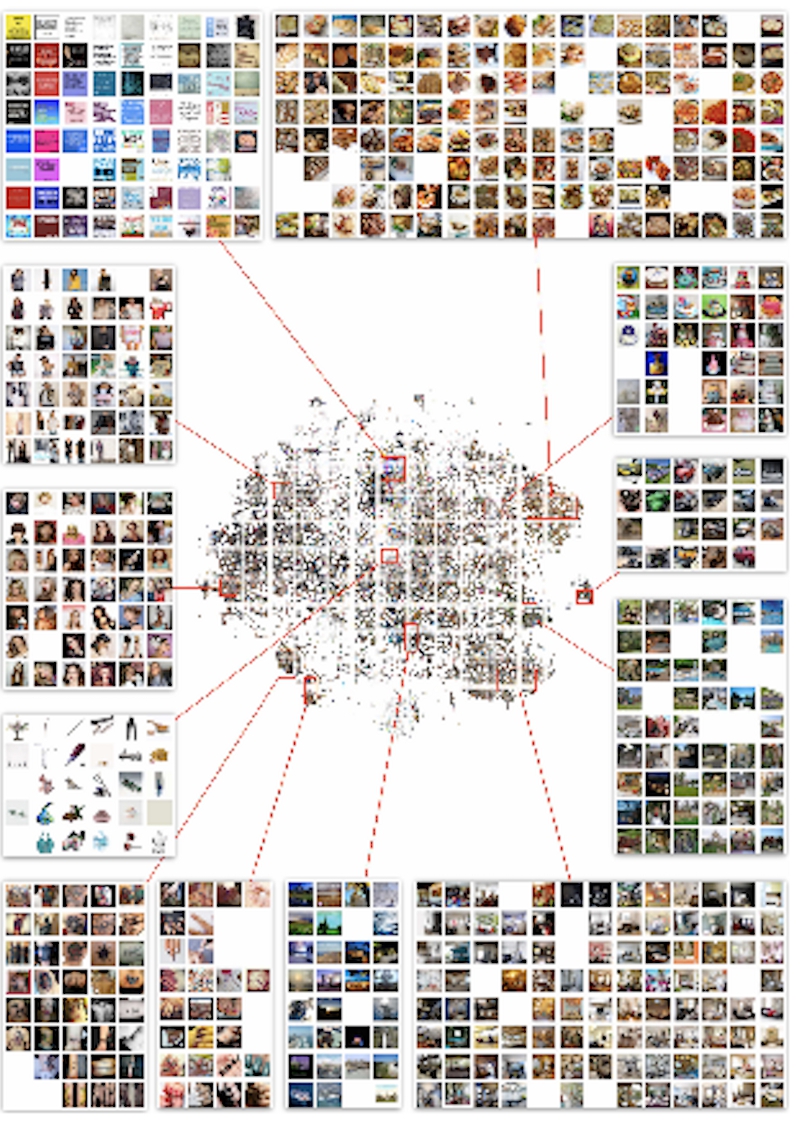

In the blog post “Machine Learning In Visual Search,” we’ll cover the machine learning background that’s required for the rest of the series. We’ll showcase some successful use cases that combine machine learning with visual similarity search. For example, the combination has enabled eBay to automate product categorization for its sellers. And at Pinterest, the marriage of machine learning and visual search has significantly improved online recommendations, increasing user engagement by almost 50%.

A few image clusters generated by Pinterest’s visual recommendation engine.

A Picture Is Worth A Thousand…Numbers

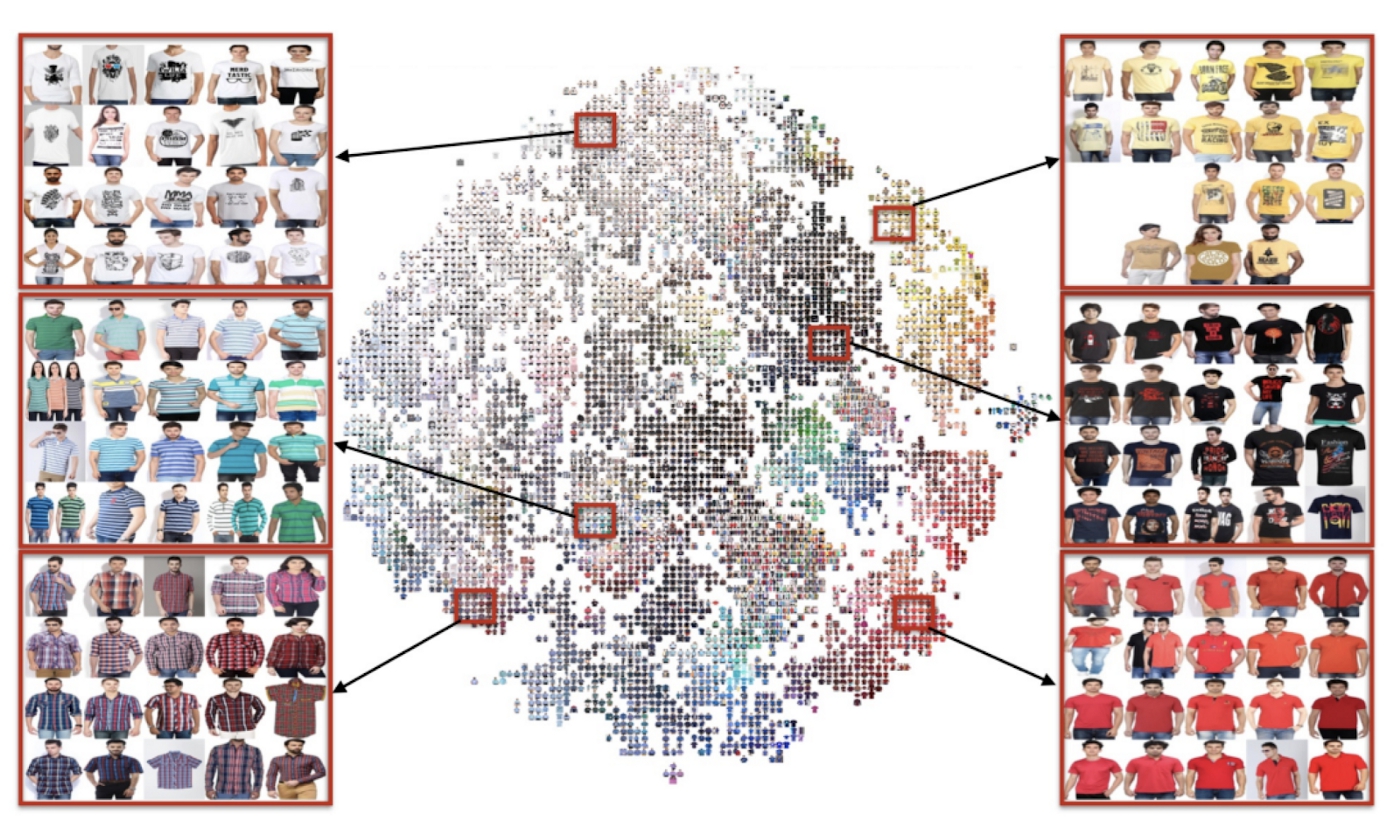

In the blog post “A Picture Is Worth A Thousand…Numbers,” we’ll focus on machine learning algorithms based on deep learning. Deep learning excels at extracting concise and meaningful information from images. Through a technique called distance metric learning, a deep network can transform any image into a compact, information-rich vector of numbers, arranged so that visually similar images map to nearby vectors. We’ll look at how Wayfair and Flipkart use this technique for visual similarity search within their massive product catalogs.

Flipkart visual similarity clusters for several shirts based on vector representations of product images.
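
To make the idea of comparing images as vectors concrete, here is a minimal sketch in Python. The product names and the 4-dimensional vectors are made up for illustration. Real embeddings come from a trained network and typically have hundreds of dimensions. Euclidean distance is just one common choice of metric, not necessarily the one Wayfair or Flipkart use.

```python
import math

# Hypothetical 4-dimensional embeddings, invented for this example.
# In a real system these vectors would be produced by a trained network.
embeddings = {
    "red_plaid_shirt": [0.9, 0.1, 0.8, 0.2],
    "blue_plaid_shirt": [0.8, 0.2, 0.9, 0.1],
    "leather_boot": [0.1, 0.9, 0.2, 0.7],
}


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Compare every product against the query image's vector.
query = embeddings["red_plaid_shirt"]
for name, vector in embeddings.items():
    print(f"{name}: {euclidean_distance(query, vector):.3f}")
```

In this toy data the two shirts sit much closer to each other than either does to the boot, which is the property a similarity search relies on.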

Big Data, Make Room For Big Vectors!

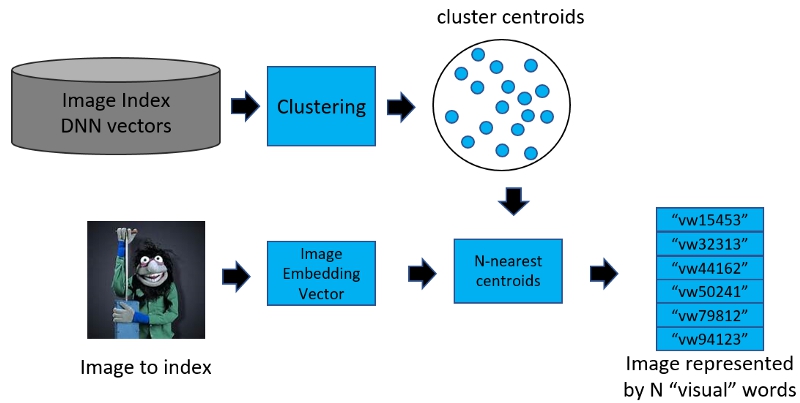

A catalog of images can easily run into the billions of entries, even when each image is represented by a compact numeric vector. How do you efficiently store and quickly search across such a massive dataset? Traditional large-scale storage systems based on SQL or NoSQL aren’t designed for this kind of workload. In the blog post “Big Data, Make Room For Big Vectors!”, we’ll get into the nuts and bolts of Big Vector storage and computing. While some have proposed hybrid systems that augment existing Big Data techniques, others have started from scratch. We’ll highlight how Facebook and Microsoft are tackling this problem. They’ve developed highly customized mathematical algorithms that optimize visual similarity search on GPUs.

Microsoft’s architecture for fast similarity search on image vectors.
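
To see why this workload is demanding, consider the simplest possible baseline: an exhaustive scan that compares the query against every stored vector. The sketch below is only that naive baseline, using random vectors and a hypothetical `top_k` helper. It is not how Facebook or Microsoft implement search.

```python
import heapq
import random


def squared_distance(a, b):
    """Squared Euclidean distance; it ranks neighbors the same as the true distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def top_k(query, catalog, k):
    """Return the ids of the k catalog vectors closest to query (exhaustive scan)."""
    return heapq.nsmallest(
        k, catalog, key=lambda item_id: squared_distance(query, catalog[item_id])
    )


# Toy catalog: 1,000 random 8-dimensional vectors keyed by an integer id.
random.seed(0)
DIM = 8
catalog = {i: [random.random() for _ in range(DIM)] for i in range(1000)}

# Use an existing item as the query, so it should be its own nearest neighbor.
query = catalog[42]
print(top_k(query, catalog, 3))
```

Every query touches every vector, so the cost grows linearly with catalog size. As a rough illustration, assuming 128-dimensional 32-bit float vectors, a billion items would occupy about 512 GB before any index is built, which is why specialized storage and search techniques matter at this scale.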

Embedded Embeddings: New Hardware For Visual Search

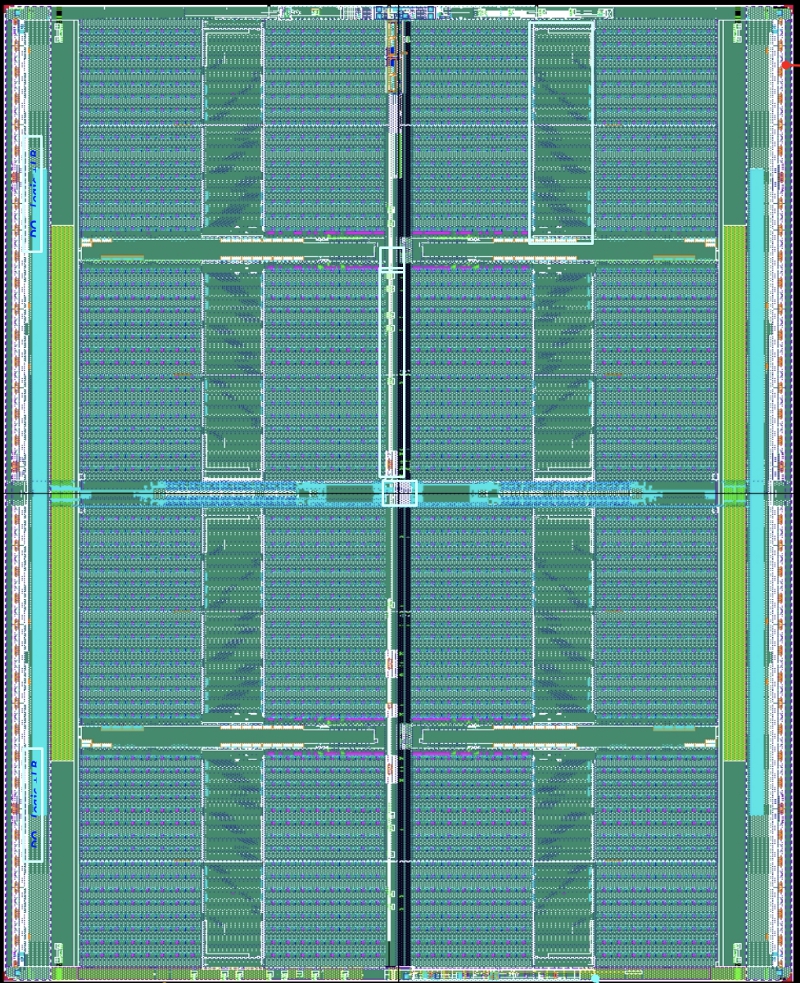

But are GPUs the only answer? Adding more power-hungry GPUs to handle ever-growing product catalogs may not be the best way to go. As a new breed of dedicated AI chips hits the market, the alternatives for fast, low-power computational search are growing. In the blog post “Embedded Embeddings: New Hardware For Visual Search,” we will showcase our own in-memory, bit-processor technology that delivers on key billion-scale similarity search benchmarks, including latency and power.

A close-up view of our multi-core bit processor technology that tightly integrates memory and compute.

Author: George Williams